Using Chinda LLM 4B with Ollama - Complete User Guide

🎯 Introduction

Chinda LLM 4B is an open-source Thai language model developed by the iApp Technology team. Built on the Qwen3-4B architecture, it can think through problems and respond in Thai with high accuracy.

Ollama is a command-line tool for downloading and running large language models locally on your own computer with minimal setup.

🚀 Step 1: Installing Ollama

Download and Install Ollama

For macOS and Linux:

curl -fsSL https://ollama.com/install.sh | sh

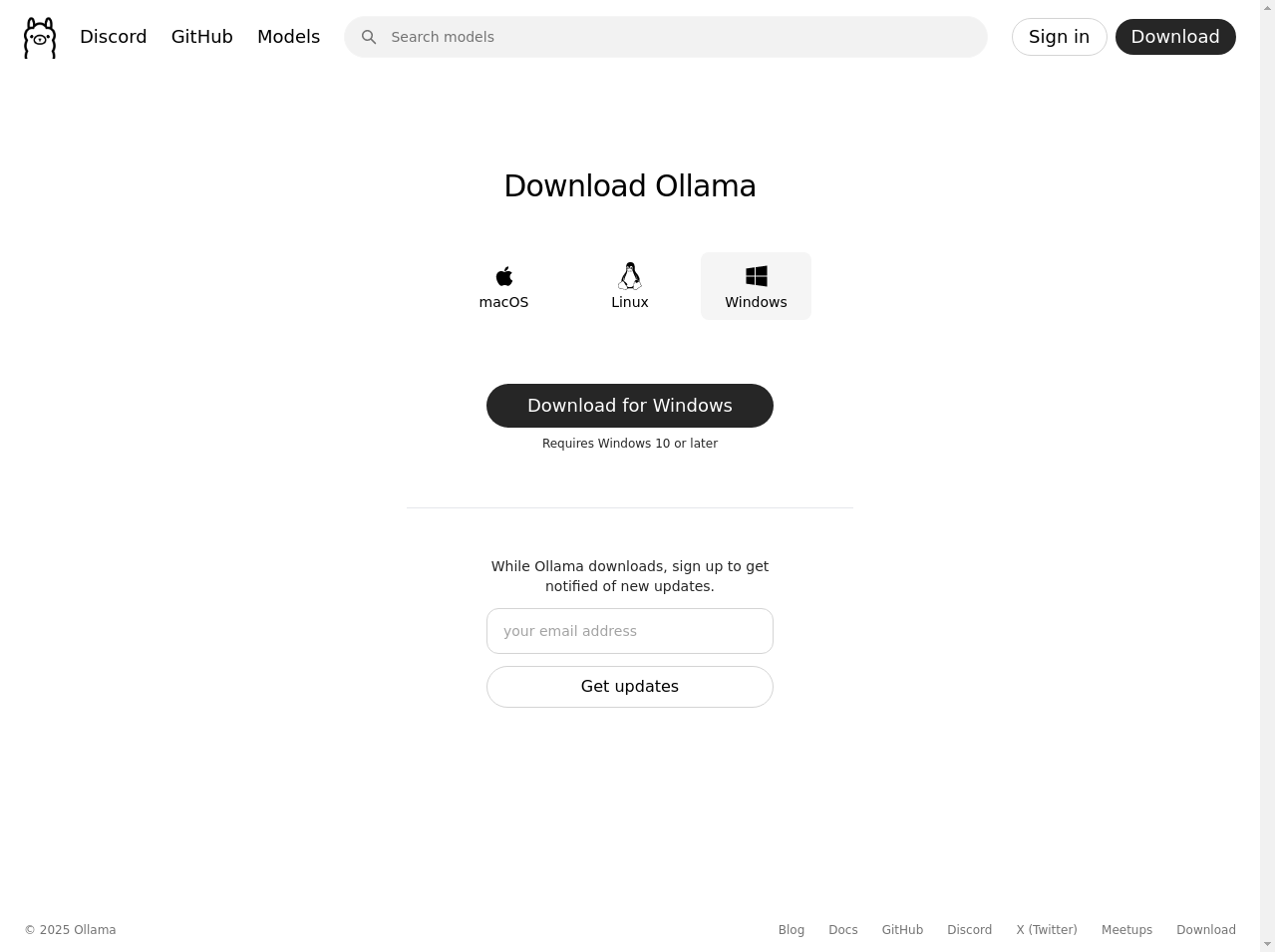

For Windows:

- Go to https://ollama.com/download

- Download the Windows installer

- Run the installer and follow the installation wizard

Verify Installation

After installation, open your terminal or command prompt and run:

ollama --version

You should see the Ollama version information if the installation was successful.

$ ollama --version

ollama version is 0.9.0
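If you automate your setup, you may want to check the installed version from a script. The following is a minimal Python sketch (standard library only) that runs `ollama --version` and extracts the number from output like the line above. The function names are hypothetical, and the regular expression assumes the output keeps the `version is X.Y.Z` wording shown here.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Assumption: output contains "version is X.Y.Z", as in "ollama version is 0.9.0".
# This also matches "client version is 0.9.0", printed when no server is running.
_VERSION_RE = re.compile(r"version is v?(\d+)\.(\d+)\.(\d+)")


def parse_ollama_version(output: str) -> Optional[Tuple[int, int, int]]:
    """Return (major, minor, patch) parsed from `ollama --version` output, or None."""
    match = _VERSION_RE.search(output)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def installed_ollama_version() -> Optional[Tuple[int, int, int]]:
    """Run `ollama --version` if the binary is on PATH; return None otherwise."""
    if shutil.which("ollama") is None:
        return None
    result = subprocess.run(
        ["ollama", "--version"], capture_output=True, text=True, check=False
    )
    # Warnings may be written to stderr, so search both streams.
    return parse_ollama_version(result.stdout + result.stderr)
```

Calling `installed_ollama_version()` returns `None` when Ollama is not on your PATH, which makes it easy to print a hint to install it first.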

🔍 Step 2: Download Chinda LLM 4B Model

Downloading the Model

Once Ollama is installed, you can download Chinda LLM 4B with a simple command:

ollama pull iapp/chinda-qwen3-4b

The model is approximately 2.5GB in size and will take some time to download depending on your internet speed. You'll see a progress bar showing the download status.

$ ollama pull iapp/chinda-qwen3-4b

pulling manifest

pulling f2c299c8384c: 100% ▕██████████████████▏ 2.5 GB

pulling 62fbfd9ed093: 100% ▕██████████████████▏ 182 B

pulling 70a7c2ca54f5: 100% ▕██████████████████▏ 159 B

pulling c79654219fbe: 100% ▕██████████████████▏ 74 B

pulling e8fb2837968f: 100% ▕██████████████████▏ 487 B

verifying sha256 digest

writing manifest

success
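The same download can also be started from code through Ollama's REST API (`POST /api/pull`). When streaming, the server sends one JSON object per line with a `status` field and, while layers download, `total` and `completed` byte counts. This is a minimal sketch assuming a recent Ollama version that accepts the `model` field and a server on the default port 11434 (see Step 4); the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the Ollama API server


def describe_progress(event: dict) -> str:
    """Turn one streamed /api/pull event into a short human-readable line."""
    status = event.get("status", "")
    total = event.get("total")
    completed = event.get("completed")
    if total and completed is not None:
        return f"{status}: {completed * 100 / total:.0f}%"
    return status


def pull_model(model: str) -> None:
    """Pull a model via the API, printing progress as newline-delimited JSON arrives."""
    body = json.dumps({"model": model}).encode("utf-8")
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/pull",
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        for line in response:  # each non-empty line is one JSON progress event
            if line.strip():
                print(describe_progress(json.loads(line)))


# Usage (requires a running Ollama server):
# pull_model("iapp/chinda-qwen3-4b")
```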

Verify Model Download

To check if the model was downloaded successfully:

ollama list

You should see iapp/chinda-qwen3-4b:latest in the list of available models.

$ ollama list

NAME                           ID              SIZE      MODIFIED
iapp/chinda-qwen3-4b:latest    f66773e50693    2.5 GB    35 seconds ago
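From a script, you can check the same list through the API: `GET /api/tags` returns a `models` array whose entries carry a `name` such as `iapp/chinda-qwen3-4b:latest`. The sketch below is hedged: the helper names are hypothetical, it assumes the default server address, and it treats a name without a tag as `:latest`, the way the CLI does.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama API address


def normalize_name(name: str) -> str:
    """Append the implicit ':latest' tag when a model name has no tag."""
    last_segment = name.rsplit("/", 1)[-1]
    return name if ":" in last_segment else f"{name}:latest"


def model_installed(tags: dict, name: str) -> bool:
    """Check a parsed /api/tags response for the given model name."""
    wanted = normalize_name(name)
    return any(
        normalize_name(entry.get("name", "")) == wanted
        for entry in tags.get("models", [])
    )


def fetch_tags() -> dict:
    """Fetch the locally installed models (GET /api/tags)."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=10) as response:
        return json.load(response)


# Usage (requires a running Ollama server):
# print(model_installed(fetch_tags(), "iapp/chinda-qwen3-4b"))
```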

⚙️ Step 3: Basic Usage

Start Chatting with Chinda LLM

To start an interactive chat session with Chinda LLM 4B:

ollama run iapp/chinda-qwen3-4b

This will start an interactive chat session where you can type your questions in Thai and get responses immediately.

Example Conversation

ollama run iapp/chinda-qwen3-4b

>>> สวัสดีครับ ช่วยอธิบายเกี่ยวกับปัญญาประดิษฐ์ให้ฟังหน่อย

# Prompt: "Hello, please explain artificial intelligence to me." Chinda LLM will respond in Thai with an explanation.

>>> /bye # Type this to exit the chat

Single Question Mode

To ask a single question without entering chat mode, pass the prompt as an argument (this example asks the model to explain artificial intelligence):

ollama run iapp/chinda-qwen3-4b "อธิบายเกี่ยวกับปัญญาประดิษฐ์ให้ฟังหน่อย"
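Single-question mode also makes it easy to call the model from a script. This sketch shells out to the `ollama run` command shown above using Python's `subprocess` module. It assumes `ollama` is on your PATH, and `build_run_command` and `ask_once` are hypothetical helper names.

```python
import subprocess
from typing import List

MODEL = "iapp/chinda-qwen3-4b"


def build_run_command(prompt: str, model: str = MODEL) -> List[str]:
    """Build the argv for a one-shot `ollama run <model> <prompt>` call."""
    # Passing a list (not a shell string) avoids quoting problems with Thai text.
    return ["ollama", "run", model, prompt]


def ask_once(prompt: str, model: str = MODEL) -> str:
    """Run a single prompt and return the model's answer as text."""
    result = subprocess.run(
        build_run_command(prompt, model),
        capture_output=True,
        text=True,
        encoding="utf-8",
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout.strip()


# Usage (requires Ollama and the downloaded model):
# print(ask_once("อธิบายเกี่ยวกับปัญญาประดิษฐ์ให้ฟังหน่อย"))
```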

🌐 Step 4: API Server Usage

Starting the Ollama API Server

For developers who want to integrate Chinda LLM into their applications:

ollama serve

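This starts the Ollama API server at http://localhost:11434. If the Ollama desktop app or the Linux system service is already running, the server is already available, and running ollama serve again will fail because the port is in use.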
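When you start the server from a script (for example in CI), it helps to wait until it answers before sending requests. This standard-library sketch polls `GET /api/version`, an Ollama endpoint that returns the server version as JSON. The helper names and the 30-second default deadline are arbitrary choices for illustration, not part of Ollama.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # assumed default address used by `ollama serve`


def server_version(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[str]:
    """Return the server's version string from GET /api/version, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as response:
            return json.load(response).get("version")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON reply: treat as "not ready".
        return None


def wait_for_server(base_url: str = OLLAMA_URL, deadline_s: float = 30.0) -> bool:
    """Poll the server every 0.5 s until it answers or the deadline passes."""
    give_up_at = time.monotonic() + deadline_s
    while time.monotonic() < give_up_at:
        if server_version(base_url) is not None:
            return True
        time.sleep(0.5)
    return False
```

A typical pattern is to launch `ollama serve` in the background and then call `wait_for_server()` before the first API request.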

Using the API with curl

Basic API Call:

curl http://localhost:11434/api/generate -d '{
  "model": "iapp/chinda-qwen3-4b",
  "prompt": "สวัสดีครับ",
  "stream": false
}'
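With `"stream": false`, the reply is a single JSON object: the generated text is in `response`, and, according to the Ollama API documentation, it also includes timing fields such as `eval_count` (tokens generated) and `eval_duration` (in nanoseconds). The sketch below shows how you might derive generation speed from them; `tokens_per_second` is a hypothetical helper.

```python
def tokens_per_second(reply: dict) -> float:
    """Generation speed from a non-streaming /api/generate reply.

    Assumes the reply carries `eval_count` (tokens generated) and
    `eval_duration` (time spent generating, in nanoseconds).
    """
    count = reply.get("eval_count", 0)
    duration_ns = reply.get("eval_duration", 0)
    if not duration_ns:
        return 0.0  # field missing or zero: no meaningful speed
    return count * 1_000_000_000 / duration_ns
```

Pass it the parsed JSON reply, for example `response.json()` from a `requests.post` call, to compare speed across prompts or machines.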

Chat API Call:

curl http://localhost:11434/api/chat -d '{
  "model": "iapp/chinda-qwen3-4b",
  "messages": [
    {
      "role": "user",
      "content": "อธิบายเกี่ยวกับปัญญาประดิษฐ์ให้ฟังหน่อย"
    }
  ]
}'
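The chat endpoint does not remember earlier turns on its own: to hold a multi-turn conversation, you resend the whole `messages` list each time, including the assistant's previous replies. Here is a minimal sketch of that bookkeeping. It sets `"stream": false` so each reply arrives as one JSON object with the text in `message.content`, and `ChatSession` is a hypothetical helper class, not part of Ollama.

```python
import json
import urllib.request
from typing import Dict, List

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama API address


class ChatSession:
    """Keeps the running message history for /api/chat."""

    def __init__(self, model: str = "iapp/chinda-qwen3-4b") -> None:
        self.model = model
        self.messages: List[Dict[str, str]] = []

    def build_payload(self, user_text: str) -> dict:
        """Record the user turn and return the request body for /api/chat."""
        self.messages.append({"role": "user", "content": user_text})
        return {"model": self.model, "messages": list(self.messages), "stream": False}

    def record_reply(self, reply: dict) -> str:
        """Store the assistant turn from a non-streaming reply and return its text."""
        message = reply["message"]
        self.messages.append({"role": message["role"], "content": message["content"]})
        return message["content"]

    def send(self, user_text: str) -> str:
        """Send one user turn to the server and return the assistant's answer."""
        body = json.dumps(self.build_payload(user_text)).encode("utf-8")
        request = urllib.request.Request(
            f"{OLLAMA_URL}/api/chat",
            data=body,
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(request, timeout=300) as response:
            return self.record_reply(json.load(response))


# Usage (requires a running Ollama server):
# chat = ChatSession()
# print(chat.send("สวัสดีครับ"))
# print(chat.send("อธิบายเกี่ยวกับปัญญาประดิษฐ์ให้ฟังหน่อย"))
```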

Using with Python

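# Requires the third-party requests package: pip install requests
import requests

def chat_with_chinda(message):
    """Send one prompt to Chinda LLM through the Ollama API and return the reply text."""
    url = "http://localhost:11434/api/generate"
    data = {
        "model": "iapp/chinda-qwen3-4b",
        "prompt": message,
        "stream": False,  # return the whole answer as one JSON object
    }
    response = requests.post(url, json=data, timeout=300)
    response.raise_for_status()  # surface HTTP errors such as a missing model
    return response.json()["response"]

# Example usage
response = chat_with_chinda("สวัสดีครับ")
print(response)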
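The function above waits for the complete answer. For long replies, you can set `"stream": True` and print text as it arrives; Ollama then sends one JSON object per line, each carrying a fragment in `response`, with `"done": true` on the last one. This is a standard-library sketch under that assumption; `iter_fragments` and `stream_chinda` are hypothetical names.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from newline-delimited /api/generate stream events."""
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        event = json.loads(line)
        yield event.get("response", "")
        if event.get("done"):
            break


def stream_chinda(message: str, url: str = "http://localhost:11434/api/generate") -> str:
    """Print the answer as it streams in and return the full text."""
    body = json.dumps(
        {"model": "iapp/chinda-qwen3-4b", "prompt": message, "stream": True}
    ).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    parts = []
    with urllib.request.urlopen(request, timeout=300) as response:
        for fragment in iter_fragments(response):
            print(fragment, end="", flush=True)
            parts.append(fragment)
    print()
    return "".join(parts)
```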

💬 Step 5: Advanced Usage

Setting Custom Parameters

You can customize the model's behavior by setting parameters inside an interactive session:

ollama run iapp/chinda-qwen3-4b

>>> /set parameter temperature 0.7

>>> /set parameter top_p 0.9

>>> /set parameter top_k 40
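Settings made with /set parameter apply only to the current interactive session. When calling the API, the same settings can be passed per request in an `options` object. The sketch below builds such a request body; the values simply mirror the example above and are not tuned recommendations, and `generate_payload` is a hypothetical helper.

```python
import json

# Sampling settings mirroring the /set parameter example above (not tuned values).
SAMPLING_OPTIONS = {"temperature": 0.7, "top_p": 0.9, "top_k": 40}


def generate_payload(prompt: str, options: dict = SAMPLING_OPTIONS) -> str:
    """Build a JSON body for POST /api/generate with per-request model options."""
    return json.dumps(
        {
            "model": "iapp/chinda-qwen3-4b",
            "prompt": prompt,
            "stream": False,
            "options": dict(options),  # copy so the shared default is never mutated
        },
        ensure_ascii=False,  # keep Thai text readable in the JSON
    )
```

The resulting string can be sent as the request body, for example with curl's -d option as in Step 4.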

Model Information

To see detailed information about the model:

ollama show iapp/chinda-qwen3-4b
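The same details are available programmatically from `POST /api/show`. In recent Ollama versions, the response includes a `model_info` object whose keys are prefixed with the model architecture (for example `qwen3.context_length`). The sketch below assumes that layout and looks up the context length without hard-coding the prefix; the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama API address


def context_length(show_reply: dict) -> Optional[int]:
    """Find '<architecture>.context_length' in a /api/show reply, if present."""
    info = show_reply.get("model_info", {})
    arch = info.get("general.architecture")
    if arch and f"{arch}.context_length" in info:
        return int(info[f"{arch}.context_length"])
    # Fall back to any key ending in '.context_length'.
    for key, value in info.items():
        if key.endswith(".context_length"):
            return int(value)
    return None


def show_model(model: str = "iapp/chinda-qwen3-4b") -> dict:
    """Fetch model details from POST /api/show."""
    body = json.dumps({"model": model}).encode("utf-8")
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/show",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


# Usage (requires a running Ollama server):
# print(context_length(show_model()))
```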

Usage Examples

Let's test with different types of questions:

Mathematics Question (solve the equation 2x + 5 = 15):

ollama run iapp/chinda-qwen3-4b "ช่วยแก้สมการ 2x + 5 = 15 ให้หน่อย"

Document Writing (a thank-you letter to a customer):

ollama run iapp/chinda-qwen3-4b "ช่วยเขียนจดหมายขอบคุณลูกค้าให้หน่อย"

Programming Help (a Python function for finding even numbers):

ollama run iapp/chinda-qwen3-4b "เขียนฟังก์ชัน Python สำหรับหาเลขคู่ให้หน่อย"
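To compare answers across task types, you can loop over prompts like the three above. This sketch takes the generation function as a parameter (for instance, the chat_with_chinda function from Step 4), which keeps it independent of how the model is called. `run_batch` and the `PROMPTS` labels are hypothetical.

```python
from typing import Callable, Dict

# The three example prompts above: math, letter writing, and programming.
PROMPTS = {
    "math": "ช่วยแก้สมการ 2x + 5 = 15 ให้หน่อย",
    "letter": "ช่วยเขียนจดหมายขอบคุณลูกค้าให้หน่อย",
    "code": "เขียนฟังก์ชัน Python สำหรับหาเลขคู่ให้หน่อย",
}


def run_batch(prompts: Dict[str, str], generate: Callable[[str], str]) -> Dict[str, str]:
    """Send each prompt through `generate` and collect the answers by label."""
    answers = {}
    for label, prompt in prompts.items():
        answers[label] = generate(prompt)
    return answers


# Usage with the chat_with_chinda function from Step 4:
# for label, answer in run_batch(PROMPTS, chat_with_chinda).items():
#     print(f"--- {label} ---\n{answer}")
```

Passing the function in, rather than calling the API directly, also lets you swap in the streaming or subprocess-based variants without changing the loop.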

🔧 Troubleshooting

Common Issues and Solutions

Issue: Model Not Found

Error: pull model manifest: file does not exist

Solution: Make sure you typed the model name correctly:

ollama pull iapp/chinda-qwen3-4b

Issue: Ollama Service Not Running

Error: could not connect to ollama app, is it running?

Solution: Start the Ollama server, either by opening the Ollama desktop app or by running:

ollama serve

Issue: Out of Memory

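If you run out of memory, first close other applications. Ollama has no --low-memory option; instead, you can reduce the context window, which lowers memory use, from inside an interactive session:

ollama run iapp/chinda-qwen3-4b

>>> /set parameter num_ctx 2048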
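Why does a smaller context help? Besides the weights, the model keeps a key/value (KV) cache whose size grows linearly with the context length. The sketch below estimates it with the standard formula 2 × layers × KV heads × head dimension × bytes per value × tokens. The architecture numbers are assumptions taken from the published Qwen3-4B configuration (36 layers, 8 KV heads, head dimension 128) with a 16-bit cache; Ollama's actual allocation may differ.

```python
# Assumed Qwen3-4B attention shape (from its published config), not read from Ollama.
NUM_LAYERS = 36
NUM_KV_HEADS = 8
HEAD_DIM = 128
BYTES_PER_VALUE = 2  # assumed 16-bit (f16) cache entries


def kv_cache_bytes(num_ctx: int) -> int:
    """Approximate KV-cache size for a given context length, in bytes."""
    # Factor 2: one key vector and one value vector per KV head, per layer, per token.
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE * num_ctx


for ctx in (2048, 8192, 40960):
    print(f"num_ctx={ctx:>6}: ~{kv_cache_bytes(ctx) / 2**30:.2f} GiB")
```

Under these assumptions, the cache needs about 144 KiB per token: roughly 0.3 GiB at 2048 tokens versus over 5 GiB at the full 40K window.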

Performance Tips

- Close unnecessary applications to free up memory

- Use SSD storage for better model loading speed

- Ensure sufficient RAM (recommended: 8GB or more)

🎯 Suitable Use Cases

✅ What Chinda LLM 4B Does Well

- Document Drafting - Help write letters, articles, or various documents in Thai

- RAG (Retrieval-Augmented Generation) - Answer questions from provided documents

- Mathematics Questions - Solve math problems of various levels

- Programming - Help write code and explain functionality in Thai

- Language Translation - Translate between Thai and English

- Creative Writing - Generate stories, poems, or creative content

- Educational Content - Explain concepts and provide learning materials
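For RAG-style use, the key is to put the relevant text in the prompt and ask the model to answer only from it. Below is a minimal prompt-building sketch. The retrieval step (finding the passages) is out of scope, `build_rag_prompt` is a hypothetical helper, and the instruction wording is illustrative rather than a prompt format recommended by iApp.

```python
from typing import List


def build_rag_prompt(question: str, passages: List[str]) -> str:
    """Combine retrieved passages and a question into one grounded prompt."""
    # Number the passages so the model (and you) can refer back to them.
    numbered = "\n\n".join(
        f"[{i}] {text.strip()}" for i, text in enumerate(passages, start=1)
    )
    # Illustrative instruction wording; adjust or translate to Thai as needed.
    return (
        "Answer the question using only the documents below. "
        "If the answer is not in the documents, say that you don't know.\n\n"
        f"Documents:\n{numbered}\n\n"
        f"Question: {question}"
    )
```

The resulting string can be sent as the prompt of the /api/generate call from Step 4.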

❌ Limitations to Be Aware Of

Avoid asking for facts without providing context, such as:

- Latest news events

- Specific statistical data

- Information about specific people or organizations

- Real-time information

Because Chinda LLM 4B is a relatively small model (4 billion parameters), it may generate incorrect information (hallucinate) when asked about specific facts without supporting context.

🚀 Model Specifications

- Size: 2.5GB (quantized)

- Context Window: 40K tokens

- Architecture: Based on Qwen3-4B, optimized for Thai language

- Performance: Fast inference on consumer hardware

- Memory Requirements: Minimum 4GB RAM, recommended 8GB+

🔮 What's Coming

The iApp Technology team is developing a new, larger model that will be able to answer factual questions more accurately. It is expected to be released soon.

📚 Additional Resources

Quick Reference Commands

# Install Ollama (Linux)

curl -fsSL https://ollama.com/install.sh | sh

# Download Chinda LLM 4B

ollama pull iapp/chinda-qwen3-4b

# Start chatting

ollama run iapp/chinda-qwen3-4b

# List installed models

ollama list

# Remove a model

ollama rm iapp/chinda-qwen3-4b

# Start API server

ollama serve

Links and Resources

- 🌐 Demo: https://chindax.iapp.co.th (select ChindaLLM 4B)

- 📦 Model Download: https://huggingface.co/iapp/chinda-qwen3-4b

- 🐋 Ollama: https://ollama.com/iapp/chinda-qwen3-4b

- 🏠 Homepage: https://iapp.co.th/products/chinda-opensource-llm

- 📄 License: Apache 2.0 (Available for both commercial and personal use)

🎉 Summary

Chinda LLM 4B with Ollama is an excellent choice for those who want to use Thai AI models locally with maximum performance and minimal setup. The command-line interface provides powerful features for both casual users and developers.

Key advantages of using Ollama:

- Fast performance with optimized inference

- Easy installation with simple commands

- API support for integration into applications

- No internet required after initial download

- Privacy-focused - all processing happens locally

Start using Chinda LLM 4B today and experience the power of Thai AI running locally on your machine!

Built with ❤️ by iApp Technology Team - For Thai AI Development